How to stop AI deepfakes from sinking society — and science

Protect society and science from the growing threat of AI deepfakes. Learn how to detect and prevent deepfakes, promote media literacy, and explore the role of blockchain data storage. Discover why AI regulations are crucial to combat deepfake crimes and their potential geopolitical impact.

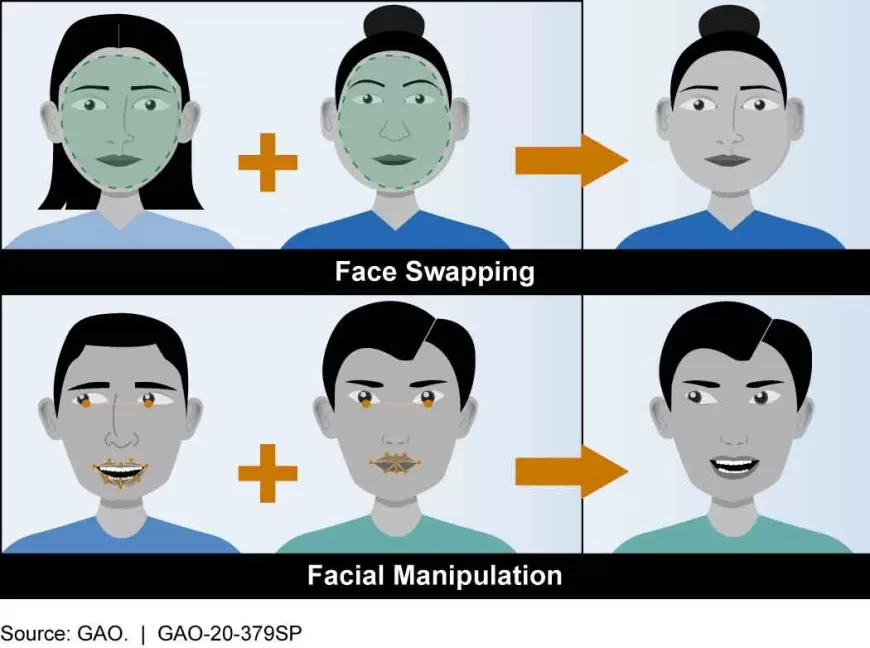

AI deepfakes are a growing threat to society and science. Deepfakes are videos, images, or audio recordings that have been generated or manipulated with AI to make it look or sound as if someone said or did something they never did. They can be used to spread misinformation, damage reputations, and even commit crimes.

Here are some ways to stop AI deepfakes from sinking society and science:

- Invest in deepfake detection technology. Researchers are developing new ways to detect deepfakes, but more investment is needed to make this technology widely available.

- Educate the public about deepfakes. People need to be aware of the dangers of deepfakes and how to spot them.

- Promote media literacy. People need to be able to critically evaluate the information they see and hear.

- Support research on deepfakes. Researchers need to continue to develop new ways to detect and prevent deepfakes.

How blockchain data storage can protect us from deepfakes

Blockchain data storage can help protect us from deepfakes by providing a tamper-evident record of digital content. A record written to a blockchain is replicated across a network of computers and cryptographically linked to the records before it, so altering it without detection is very difficult.

To use blockchain data storage to protect against deepfakes, content creators could register their original videos, or cryptographic hashes of them, on a blockchain. This would not stop someone from making a deepfake of the video, but it would let anyone check whether a circulating copy matches what the creator originally published.
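The registration idea above can be sketched in a few lines. This is a minimal illustration, not a real blockchain: `ToyLedger` and its methods are hypothetical names, the ledger lives in one process rather than across a network, and it stores only SHA-256 hashes of the content instead of the videos themselves.

```python
import hashlib
import json


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of data as a hex string."""
    return hashlib.sha256(data).hexdigest()


class ToyLedger:
    """Hypothetical append-only ledger; each record commits to the previous one."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first record

    def __init__(self):
        self.blocks = []

    @staticmethod
    def _hash_record(record: dict) -> str:
        # Hash only the fields that define the record, in a stable order.
        payload = {k: record[k] for k in ("creator", "content_hash", "prev_hash")}
        return sha256_hex(json.dumps(payload, sort_keys=True).encode("utf-8"))

    def register(self, creator: str, content: bytes) -> dict:
        """Append a record holding the hash of the original content."""
        prev_hash = self.blocks[-1]["block_hash"] if self.blocks else self.GENESIS
        record = {
            "creator": creator,
            "content_hash": sha256_hex(content),
            "prev_hash": prev_hash,
        }
        record["block_hash"] = self._hash_record(record)
        self.blocks.append(record)
        return record

    def is_intact(self) -> bool:
        """Check that no record was altered after it was appended."""
        prev_hash = self.GENESIS
        for block in self.blocks:
            if block["prev_hash"] != prev_hash:
                return False
            if block["block_hash"] != self._hash_record(block):
                return False
            prev_hash = block["block_hash"]
        return True

    def is_registered(self, content: bytes) -> bool:
        """Check whether a copy is byte-identical to some registered original."""
        digest = sha256_hex(content)
        return any(block["content_hash"] == digest for block in self.blocks)


ledger = ToyLedger()
original = b"original video bytes"  # stand-in for a real video file
ledger.register("newsroom", original)
```

Calling `ledger.is_registered(copy_bytes)` on a circulating copy then tells a viewer whether it matches a registered original, and `ledger.is_intact()` shows why edits to past records are detectable: changing any field breaks the chain of hashes.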

In addition, blockchain data storage could be used to maintain a database of signatures of known deepfakes. Suspect content could then be checked against this database to see whether it matches a deepfake that has already been identified.
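As a rough sketch of such a lookup, the example below treats a "signature" as the exact SHA-256 digest of a file. That is an assumption made for brevity: an exact hash only catches byte-identical copies, so a re-encoded or cropped copy would need some form of perceptual or similarity hashing instead. `SignatureDatabase` and the sample labels are hypothetical.

```python
import hashlib
from typing import Optional


def signature(data: bytes) -> str:
    """Assumption: an exact SHA-256 digest stands in for a deepfake signature."""
    return hashlib.sha256(data).hexdigest()


class SignatureDatabase:
    """Hypothetical in-memory store mapping signatures to descriptive labels."""

    def __init__(self):
        self._known = {}

    def add(self, data: bytes, label: str) -> None:
        """Record a piece of content that has been identified as a deepfake."""
        self._known[signature(data)] = label

    def lookup(self, data: bytes) -> Optional[str]:
        """Return the label if this exact content is a known deepfake, else None."""
        return self._known.get(signature(data))


db = SignatureDatabase()
known_fake = b"bytes of a known fake clip"  # stand-in for a real file
db.add(known_fake, "known deepfake (hypothetical sample)")
```

A platform could call `db.lookup(upload_bytes)` on each upload and flag anything that returns a label, leaving unmatched content to other detection methods.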

Why focus on future AI regulations when deepfake crimes persist?

Deepfake crimes are a serious problem, but they are not the only problem associated with AI. AI has the potential to be used for many beneficial purposes, but it also has the potential to be used for harmful purposes.

It is important to start developing AI regulations now, before the problem gets worse, to help ensure that AI is used for good and not for harm.

Here are some specific ways that AI regulations can help to combat deepfake crimes:

- Require companies to use deepfake detection technology. Companies that host or distribute video and audio content could be required to screen it with deepfake detection technology to limit the spread of deepfakes.

- Make it illegal to create or distribute deepfakes. Some countries are already considering such laws, which would help to deter people from making and sharing deepfakes.

- Increase penalties for deepfake crimes. Stiffer penalties would signal that these offences are taken seriously and would help to deter people from committing them.

Deepfakes can cause geopolitical rifts. States should fund detection of manipulated videos

Deepfakes can be used to manipulate public opinion and sow discord between countries. For example, a deepfake could be used to make it look like a political leader is saying something that they never actually said. This could be used to damage the leader's reputation and undermine their authority.

States should fund the development and deployment of deepfake detection technology. This technology could be used to identify and prevent the spread of deepfakes.

In addition, states should educate their citizens about deepfakes. Citizens need to be aware of the dangers of deepfakes and how to spot them.

Deepfakes can swing elections and erode public trust. Two ways to combat them are detection and provenance. Their looming threat necessitates proactive State intervention

Deepfakes have the potential to swing elections by manipulating public opinion. For example, a deepfake could be used to make it look like a candidate is saying something that they never actually said. This could damage the candidate's reputation and lead voters to vote for their opponent.

Deepfakes can also erode public trust in institutions and leaders. If people are constantly seeing deepfakes, they may start to question the authenticity of everything they see and hear. This can lead to a decline in public trust and make it more difficult for governments and other institutions to function effectively.

There are two main ways to combat deepfakes: detection and provenance. Detection refers to the ability to identify deepfakes. Provenance refers to the ability to track the origin of a video or audio recording.
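To make the provenance idea concrete, here is a minimal sketch in which a publisher attaches a tag that binds its name to the exact content it released. It uses an HMAC from Python's standard library, which relies on a shared secret key. That is an assumption chosen to keep the example self-contained: a real provenance system would more plausibly use public-key signatures so that anyone can verify a tag without holding the secret. The function names and sample values are hypothetical.

```python
import hashlib
import hmac


def make_provenance_tag(key: bytes, source: str, content: bytes) -> str:
    """Bind a source name to the hash of the content under a secret key."""
    content_hash = hashlib.sha256(content).hexdigest()
    message = f"{source}:{content_hash}".encode("utf-8")
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify_provenance(key: bytes, source: str, content: bytes, tag: str) -> bool:
    """Return True only if the tag matches this source and this exact content."""
    expected = make_provenance_tag(key, source, content)
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(expected, tag)


key = b"demo-secret-key"        # hypothetical key, for illustration only
recording = b"original audio"   # stand-in for a real recording
tag = make_provenance_tag(key, "campaign-office", recording)
```

Verification fails if either the content or the claimed source changes, which is the property provenance depends on: the tag says where a recording came from and that it has not been altered since.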

State intervention is necessary to combat deepfakes because they pose a serious threat to democracy and public trust. States can fund the development and deployment of deepfake detection and provenance technology. They can also educate their citizens about deepfakes and how to spot them.
